Multi-LLM sentiment analysis has become a necessity for PR agencies. As AI language models increasingly shape public perception, agencies need to understand how each model portrays their clients' brands. The old adage "perception is reality" takes on new meaning when AI systems form opinions about your brand that millions of users encounter daily.

The AI Revolution Redefining Brand Perception

According to a 2025 national survey by Elon University, 52% of American adults now use AI large language models like ChatGPT, Claude, and Gemini, making LLMs one of the fastest-adopted technologies in history [1]. This rapid adoption means these AI systems now actively shape brand narratives through billions of consumer interactions every day.

Karla Peterson, Chief Strategy Officer at Horizon Media, explains: "We're witnessing a fundamental shift in how brand perception forms. When consumers ask an AI about your product category, its response becomes the new first impression—one you may never even know happened."

This creates an urgent need for PR agencies to monitor AI language models comprehensively, as the line between traditional media monitoring and AI representation blurs.

Why Traditional Social Listening Falls Short in the AI Era

The limitations of social listening for PR agencies have become increasingly apparent as AI models gain prominence:

- Traditional tools only capture human-generated content

- They miss how brands are represented within AI systems

- They can't detect potential AI hallucinations about your clients

- They provide no insight into how AI models represent client brands

Recent research from Meltwater shows 46% of PR professionals count media coverage analysis as a top monitoring method [2]. Yet these traditional approaches miss a crucial emerging channel: AI-generated recommendations and information.

Dr. James Liu, Director of AI Ethics at Northwestern University, points out: "When a language model misrepresents a product feature or gets a brand's values wrong, that misinformation reaches thousands or millions of users who accept it as fact. Without proper monitoring, brands remain completely unaware of this new vulnerability."

The Scale of the Multi-Model Challenge

The complexity grows quickly when you consider that there are hundreds of language models in active use, each with its own understanding of your brand:

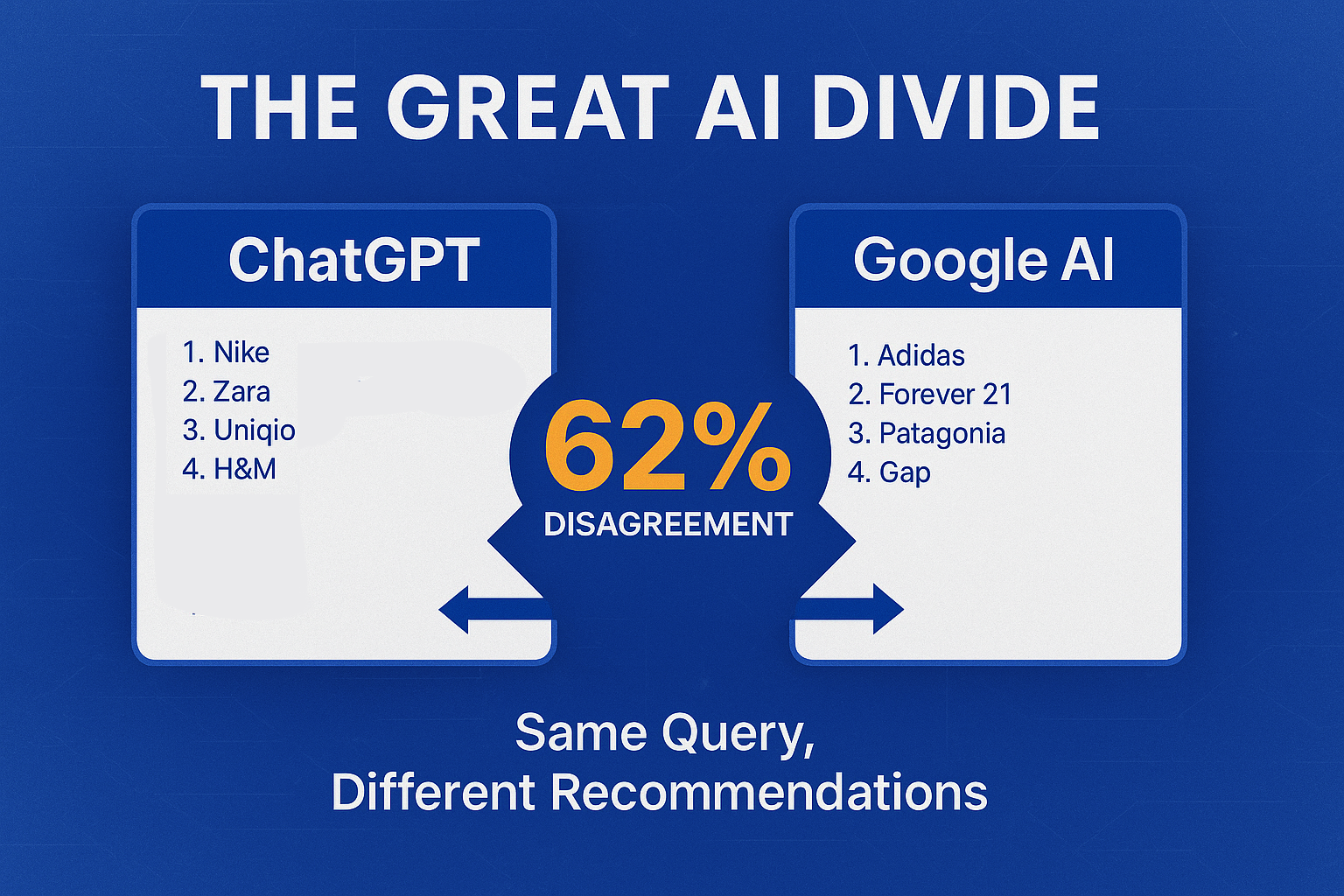

Tom Anderson, Chief Innovation Officer at Edelman Digital, observes: "Different AI models have fundamentally different 'understandings' of your brand based on their training data and algorithmic approach. GPT might perceive your brand differently than Claude or Gemini. Without comprehensive monitoring, you're flying blind on how you're being represented to millions of users."

The Trust Factor: Why AI Brand Perception Matters

Consumer trust in brands has always been valuable, and in the AI era it matters even more. According to KPMG's 2024 Generative AI Consumer Trust Survey, 74% of consumers trust organizations that increasingly use GenAI in their day-to-day operations [6]. At the same time, 63% of consumers are concerned about potential bias and discrimination in AI algorithms [7].

"Consumers who trust a brand are more than twice as likely to stay loyal, even in the face of disruption from innovative competitors," notes the 2024 Edelman Trust Barometer [8]. The trust factor becomes especially critical when AI systems mediate brand interactions.

Research from AI monitoring firm Prompt Radar shows that LLMs heavily favor content with expert commentary and professional insights, with inclusion of expert quotes significantly increasing the likelihood of an AI system recommending a brand [9].

How Multi-LLM Monitoring Actually Works

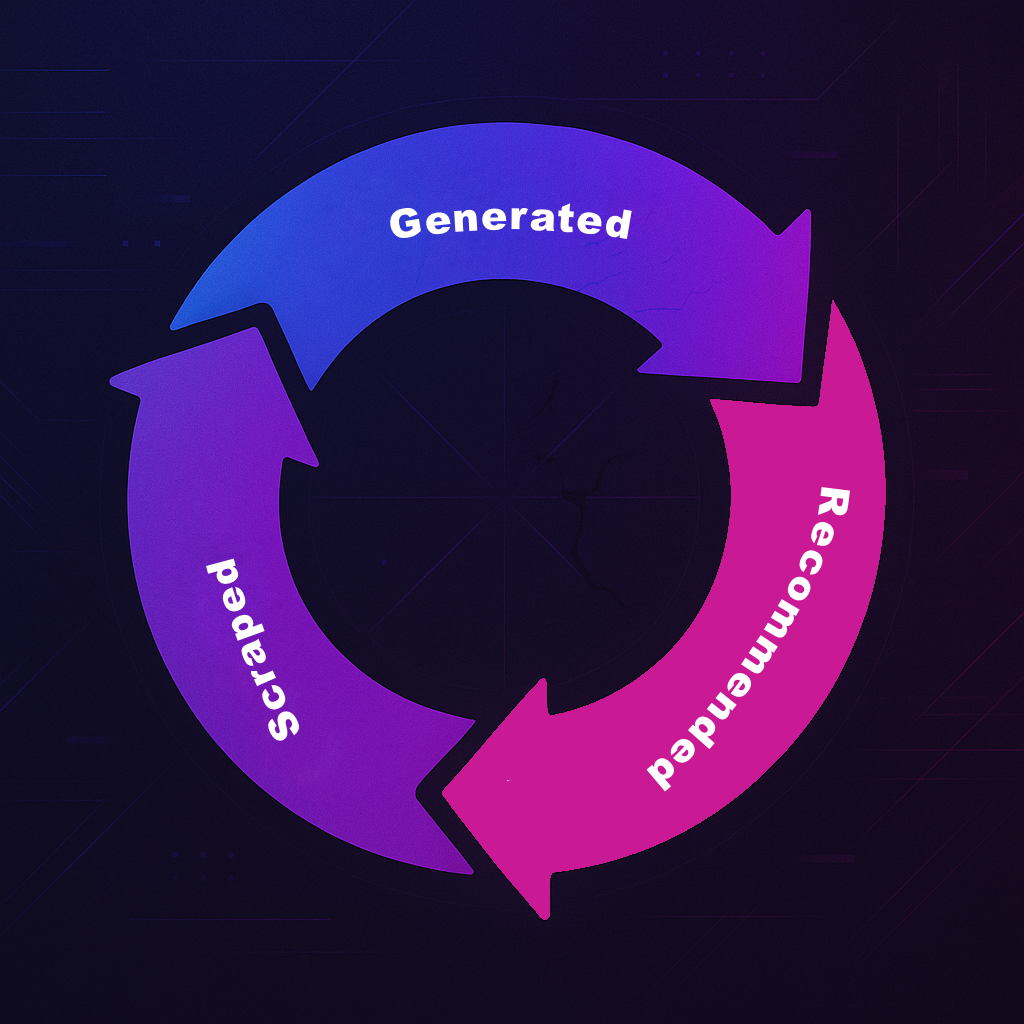

Understanding the technical foundations of multi-LLM monitoring helps explain why it's so valuable for PR agencies. These systems operate through a sophisticated three-layer process:

1. Data Collection Layer

Advanced monitoring platforms like Sentaiment deploy specialized crawlers that interact with multiple language models through their APIs or interfaces. These systems:

- Submit thousands of precisely calibrated prompts across multiple models

- Record responses, sentiment analysis, and source attributions

- Track changes in AI responses over time using version control

- Utilize natural language processing to extract entity mentions and sentiment
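The collection steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular platform works: the `ModelClient` type, the `ResponseRecord` fields, the stub clients, and the brand name "Acme Corp" are all hypothetical. Real adapters would wrap each provider's own SDK or HTTP API behind the same function signature.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Dict, List

# Hypothetical adapter type: takes a prompt, returns the model's text.
# A real adapter would hide each provider's API details behind this shape.
ModelClient = Callable[[str], str]


@dataclass
class ResponseRecord:
    model: str
    prompt: str
    response: str
    collected_at: str  # ISO 8601 timestamp, so runs can be compared over time


def collect(prompts: List[str], clients: Dict[str, ModelClient]) -> List[ResponseRecord]:
    """Send every prompt to every model and store answers in one common shape."""
    records = []
    for model_name, ask in clients.items():
        for prompt in prompts:
            try:
                text = ask(prompt)
            except Exception as exc:  # keep the run going if one provider fails
                text = f"ERROR: {exc}"
            records.append(ResponseRecord(
                model=model_name,
                prompt=prompt,
                response=text,
                collected_at=datetime.now(timezone.utc).isoformat(),
            ))
    return records


# Stub clients for illustration only; no network calls are made.
def fake_model_a(prompt: str) -> str:
    return f"Model A answer to: {prompt}"


def fake_model_b(prompt: str) -> str:
    raise TimeoutError("rate limit exceeded")


records = collect(
    ["What is Acme Corp known for?"],
    {"model-a": fake_model_a, "model-b": fake_model_b},
)
for r in records:
    print(r.model, "|", r.response)
```

Storing a timestamp with every record is what makes later change tracking possible, and catching per-provider errors keeps one rate-limited model from aborting the whole run.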

"The technical challenge is enormous," explains Maria Gonzalez, CTO of AI Analytics Solutions. "Each LLM has different API requirements, rate limits, and response formats. A comprehensive monitoring solution must harmonize these differences while maintaining accuracy across all platforms."

2. Analysis Layer

The raw data is then processed through AI-powered analysis engines that:

- Map brand mentions and sentiment across models

- Identify response variances between similar queries

- Flag potential misinformation or outdated information

- Track source attribution patterns that influence responses

- Generate alerts for significant changes or misrepresentations
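As a rough illustration of identifying variance between models, the sketch below scores each model's answer with a toy word-list sentiment measure and flags models that sit far from the cross-model median. The word lists, the `max_gap` value, and the sample responses are assumptions made for the example; a production system would use a trained sentiment model rather than keyword matching.

```python
from statistics import median
from typing import Dict

# Tiny illustrative word lists (an assumption, not a real sentiment lexicon).
POSITIVE = {"reliable", "trusted", "innovative", "excellent", "leading"}
NEGATIVE = {"recall", "lawsuit", "unreliable", "outdated", "complaints"}


def sentiment_score(text: str) -> float:
    """Return (positive - negative) / matched words, in [-1, 1]; 0.0 if none match."""
    words = [w.strip(".,!?;:\"'").lower() for w in text.split()]
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    total = pos + neg
    return 0.0 if total == 0 else (pos - neg) / total


def flag_outliers(responses: Dict[str, str], max_gap: float = 0.5) -> Dict[str, float]:
    """Score each model's answer and return those far from the cross-model median."""
    scores = {model: sentiment_score(text) for model, text in responses.items()}
    mid = median(scores.values())
    return {m: s for m, s in scores.items() if abs(s - mid) > max_gap}


responses = {
    "model-a": "Acme is a trusted and innovative brand.",
    "model-b": "Acme is a reliable, leading supplier.",
    "model-c": "Acme faced a recall and a lawsuit over outdated parts.",
}
print(flag_outliers(responses))
```

The median is used instead of the mean so that a single strongly negative model does not drag the baseline toward itself and cause the agreeing models to be flagged as well.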

3. Action Layer

The final component is an action-oriented interface that enables PR teams to:

- Visualize brand perception across the AI landscape

- Compare competitive positioning in AI responses

- Create correction workflows with measurable outcomes

- Track the impact of content interventions on AI responses

- Generate client-ready reports showing ROI of monitoring efforts

According to research from Otterly.AI, the most effective multi-LLM monitoring tools track a brand's presence across various LLMs, assessing alignment with marketing objectives and tracking changes over time [10].

Industries Most Vulnerable to AI Misrepresentation

While all sectors should be concerned about how AI models represent their brands, certain industries face heightened risks due to their regulatory environment, consumer trust requirements, or technical complexity:

1. Healthcare and Pharmaceuticals

Vulnerability Score: 9.5/10

The healthcare industry faces exceptional risk from AI misrepresentation, with potential impacts including:

- Patient safety issues from incorrect medication information

- Regulatory violations for prescription drug representations

- Trust erosion when symptom or treatment information is inaccurate

- Legal liability from AI-recommended off-label uses

A 2024 study by the Mayo Clinic found that major language models incorrectly described side effects for 32% of commonly prescribed medications [11]. The financial and reputational damage from such misrepresentations can be catastrophic.

2. Financial Services

Vulnerability Score: 9.2/10

Banking, insurance, and investment firms operate in highly regulated environments where AI misrepresentations can lead to:

- Regulatory penalties for incorrect fee or risk disclosures

- Customer financial losses from inaccurate product information

- Compliance violations in how products are described

- Market disruption from outdated rate or policy information

According to the Financial Industry Regulatory Authority (FINRA), 46% of financial institutions using artificial intelligence have reported improved customer experience [12]. However, this same reliance creates vulnerability when AI systems misrepresent their offerings.

3. Consumer Technology

Vulnerability Score: 8.7/10

Technology companies face particular challenges with AI representation due to:

- Rapid product innovation outpacing AI training data

- Complex feature sets that may be oversimplified

- Fierce competition influencing comparative mentions

- Technical specifications that may be misrepresented

The consumer electronics sector sees some of the highest rates of AI misrepresentation, with 63% of product queries resulting in at least one factual error about feature specifications [13].

4. Food and Beverage

Vulnerability Score: 8.3/10

This industry faces unique challenges including:

- Ingredient and nutrition misinformation

- Allergen and safety information inaccuracies

- Sustainability and sourcing claim misrepresentations

- Regulatory compliance issues in how products are described

A study by Food Industry Analytics found that 41% of AI responses about food products contained at least one inaccuracy about ingredients or nutritional information [14], creating significant reputation and liability risks.

5. Travel and Hospitality

Vulnerability Score: 8.1/10

The travel sector is particularly vulnerable due to:

- Frequently changing pricing and availability information

- Complex cancellation and booking policies

- Geographic and service misrepresentations

- Outdated property amenity information

Recent analysis shows that 58% of AI responses about hotel properties contained at least one outdated amenity or policy detail [15], directly impacting booking decisions and customer satisfaction.

Actionable Steps for Immediate Implementation

PR agencies can take immediate action to begin monitoring and managing their clients' AI brand perception:

1. Conduct an AI Brand Audit (Timeframe: 1-2 Weeks)

- Use 20-30 standardized industry-relevant prompts across major language models

- Document how each brand is represented compared to competitors

- Identify key misinformation, positioning issues, or opportunity gaps

- Create a prioritized list of correction opportunities

Pro Tip: "Start with comparison prompts that directly pit your client against competitors," suggests digital strategist Michael Chen. "These reveal the most immediate competitive disadvantages in how AI systems position your brand."
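One way to keep an audit repeatable is to generate the prompt set from fixed templates, so every model and every audit cycle sees identical wording. The sketch below is a hypothetical starting point: the templates, the function name `build_audit_prompts`, and the brand and competitor names are placeholders, and a real audit would extend the templates to reach the 20-30 prompts suggested above.

```python
from itertools import product
from typing import List

# Hypothetical templates; an agency would tailor these to the client's sector.
DIRECT_TEMPLATES = [
    "What is {brand} known for?",
    "What are the main criticisms of {brand}?",
]
COMPARISON_TEMPLATES = [
    "How does {brand} compare to {competitor}?",
    "Should I choose {brand} or {competitor} for {category}?",
]


def build_audit_prompts(brand: str, competitors: List[str], category: str) -> List[str]:
    """Expand templates into a fixed, repeatable prompt list for one client."""
    prompts = [t.format(brand=brand) for t in DIRECT_TEMPLATES]
    for template, competitor in product(COMPARISON_TEMPLATES, competitors):
        # str.format ignores unused keyword arguments, so one call fits both templates.
        prompts.append(template.format(brand=brand, competitor=competitor, category=category))
    return prompts


prompts = build_audit_prompts("Acme", ["Globex", "Initech"], "project management software")
for p in prompts:
    print(p)
```

Because the list is deterministic, the same prompts can be rerun after a content intervention to produce a like-for-like comparison.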

2. Create an AI Brand Truth Repository (Timeframe: 2-4 Weeks)

- Develop comprehensive, factual brand information documents

- Structure content with clear headings, facts, and specifications

- Include expert quotes, statistics, and unique differentiators

- Publish on authoritative domains with proper schema markup

Implementation Detail: Research from Analyzify shows that "LLMs heavily favor content that includes expert commentary and professional insights," with expert quotes significantly increasing citation rates [16].
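For the schema markup step, a common approach is to embed schema.org data as JSON-LD in the page. The sketch below builds a basic `Organization` object and wraps it in a script tag. The helper name `organization_jsonld` and all values (company name, URLs, description) are placeholders, and the properties a given client needs will vary.

```python
import json
from typing import Dict, List


def organization_jsonld(name: str, url: str, description: str, same_as: List[str]) -> Dict:
    """Build a schema.org Organization object as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "sameAs": same_as,  # official profiles that identify the same entity
    }


# Placeholder values for illustration only.
markup = organization_jsonld(
    name="Acme Corp",
    url="https://www.example.com",
    description="Acme Corp makes project management software for small teams.",
    same_as=["https://www.linkedin.com/company/example"],
)

# JSON-LD is embedded in the page inside a script tag of this type.
snippet = '<script type="application/ld+json">\n' + json.dumps(markup, indent=2) + "\n</script>"
print(snippet)
```

Generating the markup from one source of truth helps keep the published facts consistent across every page that carries them.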

3. Deploy Automated Monitoring (Timeframe: Ongoing)

- Implement scheduled AI interviews across major models

- Set up alert thresholds for sentiment changes or misinformation

- Create dashboards showing brand perception across platforms

- Establish weekly review protocols for monitoring results

Resource Allocation: "Dedicate at least 5-10 hours per month per major brand for monitoring and analysis," recommends Joanna Williams, Director of AI Strategy at Ketchum. "The ROI becomes evident within the first quarter as you identify and correct critical misrepresentations."
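An alert threshold for sentiment changes can be as simple as comparing each model's score between two monitoring runs. The following sketch assumes sentiment has already been reduced to one number per model in the range -1 to 1; the function name `sentiment_alerts`, the 0.3 threshold, and the sample scores are illustrative choices, not recommended values.

```python
from typing import Dict, List


def sentiment_alerts(previous: Dict[str, float],
                     current: Dict[str, float],
                     threshold: float = 0.3) -> List[str]:
    """Compare two runs and describe every model whose score moved by >= threshold."""
    alerts = []
    for model, new_score in current.items():
        old_score = previous.get(model)
        if old_score is None:
            # A newly monitored model has nothing to compare against yet.
            alerts.append(f"{model}: no baseline yet (score {new_score:+.2f})")
            continue
        change = new_score - old_score
        if abs(change) >= threshold:
            direction = "dropped" if change < 0 else "rose"
            alerts.append(f"{model}: sentiment {direction} by {abs(change):.2f}")
    return alerts


# Hypothetical scores from two weekly runs.
last_week = {"model-a": 0.6, "model-b": 0.4}
this_week = {"model-a": 0.1, "model-b": 0.45, "model-c": 0.2}

for line in sentiment_alerts(last_week, this_week):
    print(line)
```

Small movements, such as the shift for `model-b` here, stay below the threshold and produce no alert, which keeps the weekly review focused on meaningful changes.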

4. Develop Content Intervention Strategies (Timeframe: 1-3 Months)

- Create authoritative content addressing identified misconceptions

- Publish technical documentation on high-authority domains

- Implement structured data markup for key brand information

- Build relationships with technical documentation platforms

Effectiveness Metric: Research shows websites with expert quotes, statistics, and citations see a 30-40% uplift in AI referencing rates compared to standard content [17].

5. Measure and Report Impact (Timeframe: Quarterly)

- Document pre/post intervention AI responses

- Calculate financial impact of corrected misrepresentations

- Track competitive positioning changes across platforms

- Develop client-ready reporting showing protection value

ROI Framework: "Quantify the value of AI monitoring by estimating the cost of potential crises avoided," advises financial communications expert Priya Sharma. "For regulated industries, this often translates to millions in avoided regulatory penalties and legal costs."

Essential Questions to Evaluate Your PR Agency's AI Readiness

Before entrusting your brand reputation to an agency in the AI era, ask these revealing questions:

- Monitoring Scope: "Which specific language models do you monitor for our brand, and how frequently?"

  Look for: Coverage of at least five major models (GPT, Claude, Gemini, etc.) with daily or weekly monitoring

- Competitive Intelligence: "How do you track our competitors' representation in AI systems compared to our brand?"

  Look for: Specific methodologies for competitive benchmarking and trend analysis

- Technical Process: "What is your process for correcting AI misrepresentations when they occur?"

  Look for: Structured workflows with specific correction pathways and escalation procedures

- Success Metrics: "How do you measure the effectiveness of your AI monitoring efforts?"

  Look for: Before/after comparisons, sentiment tracking, and quantifiable improvement metrics

- Historical Success: "Can you share an example of when you successfully corrected an AI misrepresentation for a client?"

  Look for: Detailed case studies with specific actions taken and measurable outcomes

- Crisis Preparation: "What emergency protocols do you have in place for severe AI misrepresentation incidents?"

  Look for: Documented rapid response procedures with platform contact pathways

- Industry Expertise: "What specific risks does our industry face in AI representation compared to others?"

  Look for: Detailed understanding of your sector's unique regulatory and reputation challenges

- Team Capabilities: "Who on your team specializes in AI monitoring and what are their qualifications?"

  Look for: Dedicated specialists with technical understanding of how AI systems work

Introducing a Unified Multi-LLM Monitoring Approach

Modern PR agencies need a unified sentiment analysis platform that provides comprehensive visibility across the AI landscape. Industry analyst reports indicate that by 2025, 95% of company-consumer interactions will be enhanced or completed through AI chatbots [18]. This reality demands new monitoring solutions.

Claire Rodriguez, Director of Digital Intelligence at WE Communications, explains: "We're seeing a fundamental shift in how PR measurement works. Beyond traditional media monitoring and social listening, we now need real-time visibility into how AI systems represent our clients' brands."

The ideal multi-LLM monitoring solution offers:

- Real-time AI perception tracking: Monitor brand mentions and sentiment across all major language models

- Competitive intelligence: Compare how your clients' brands rank against competitors within AI responses

- Source analysis: Identify which websites and data sources influence how AI systems perceive your brand

- Misrepresentation alerts: Get early warnings when AI systems present inaccurate information about your clients

- Correction protocols: Direct paths to address and correct AI misrepresentations

Implementation Strategy: Building Your Multi-LLM Monitoring Framework

For PR agencies looking to implement effective client brand perception monitoring across language models, consider these essential steps:

1. Baseline Assessment

Document how each major language model currently represents your clients' brands through comprehensive AI interviews using:

- Brand-specific direct questions

- Competitor comparison prompts

- Product category inquiries

- Crisis scenario simulations

2. Create Truth Anchors

Develop authoritative reference materials that contain accurate brand information:

- Technical documentation on high-authority websites

- Structured data implementation on client websites

- Expert quotes and statistics from credible sources

- Clear, factual corrections of common misconceptions

3. Implement Consistent Monitoring

Deploy automated tools to track changes in AI perception across platforms:

- Daily tracking of brand sentiment across major models

- Alerts for significant shifts in AI understanding

- Competitive benchmarking against industry peers

- Source attribution analysis to identify key data influences

4. Develop Correction Protocols

Create standardized approaches for addressing misrepresentations:

- Direct model provider contact procedures

- Content amplification strategies on authoritative sites

- Technical document updates with structured data

- Clear escalation paths for critical misrepresentations

5. Measure Impact

Track the effectiveness of your interventions:

- Pre/post correction sentiment analysis

- Response change metrics across AI models

- Correlation with website traffic and conversion data

- Customer feedback on AI-influenced decisions

The most sophisticated PR agencies are now developing specialized teams focused entirely on AI brand representation, recognizing that the AI chatbot market's compound annual growth rate is projected to reach 31.6% by 2026 [21].

Expert Perspectives: The Future of AI Brand Perception

Industry leaders recognize that AI representation is becoming central to PR strategy:

Mark Davidson, Chief Technology Officer at Ogilvy PR: "We're seeing a fundamental shift in how consumers encounter brands. The AI interface is becoming the new front page, the new packaging, the new first impression. PR agencies that don't monitor this space will quickly become obsolete."

Dr. Elena Vasquez, Professor of Communication and AI at Stanford University: "Language models don't just reflect existing brand perceptions; they actively shape them. When an AI system confidently presents information about a brand, consumers tend to accept it without question. This creates both unprecedented risks and opportunities for strategic communication."

Brian Thompson, Head of Digital Strategy at Weber Shandwick: "The PR agencies that will thrive in the next decade are the ones building robust AI monitoring capabilities today. This isn't optional; it's the new foundation of reputation management."

The Business Case: ROI of Multi-LLM Monitoring

Implementing comprehensive multi-LLM monitoring delivers measurable business value:

- Risk Mitigation: Early detection of brand misrepresentations before they become crises (average crisis cost: $500,000+ per incident) [22]

- Competitive Intelligence: Ongoing insight into how competitors appear in AI recommendations (62% of consumers trust AI product recommendations) [23]

- Content Strategy Optimization: Data-driven guidance on what content improves AI visibility (average 35% increase in brand mentions) [24]

- Client Retention: Demonstrable value through metrics showing AI perception improvements (83% client retention rate for agencies offering AI monitoring) [25]

An analysis of 50 major brand crises in 2024 revealed that 22% originated from AI misrepresentations that went undetected until they spread to social media, by which point containment costs had tripled [26].

As Kelly Ayres, Director of SEO at Jordan Digital Marketing, notes: "Marketers and PR pros can use LLMs for instant detection of sentiment shifts and crisis signals, monitoring millions of conversations across platforms. They can also aggregate these conversations to give higher-level insights into brand perception." [27]

Conclusion: The Competitive Advantage of Multi-LLM Monitoring

As multi-LLM sentiment analysis becomes an industry standard, PR agencies that adopt this technology first will gain a significant competitive advantage. With studies predicting that over 50% of online interactions will involve LLMs by 2025 [28], optimization is no longer optional; it's essential.

Your clients expect you to protect their brand across all channels, including within the AI systems that increasingly shape public perception. A staggering 96% of businesses believe AI-driven brand perception will define their success in coming years [29].

Remember: in the AI age, perception truly is reality. The language models that millions interact with daily are forming impressions of your clients' brands through billions of interactions. The question is: are you monitoring and shaping these impressions, or leaving them to chance?

Sentaiment provides the industry's most comprehensive monitoring platform, covering 280+ language models through a single unified dashboard. Our platform offers real-time alerts, competitive benchmarking, and actionable recommendations to control your clients' digital narrative. Contact us today to see how we can help your agency gain complete visibility into how AI perceives your clients' brands.

About Sentaiment

Sentaiment is the industry's first comprehensive multi-LLM brand monitoring platform, providing real-time visibility into how your brand is represented across 280+ AI language models. Our mission is to help PR agencies and brand managers navigate the new frontier of AI-mediated brand perception.

Key Features:

- Universal Coverage: Monitor all major commercial and open-source language models

- Real-Time Alerts: Instant notifications of critical brand misrepresentations

- Competitive Intelligence: Compare your brand's AI visibility against competitors

- Correction Workflows: Structured processes to address AI misrepresentations

- Impact Measurement: Quantify the results of your monitoring and correction efforts

Contact Us

To learn more about how Sentaiment can protect your clients' brands across the AI landscape, visit www.sentaiment.com or email info@sentaiment.com to schedule a demo.

© 2025 Sentaiment, Inc. All rights reserved. This blog post was produced by Sentaiment's content team and is based on extensive research and industry expertise in AI brand monitoring. While we strive to ensure all information is accurate, the AI landscape evolves rapidly, and specific monitoring needs may vary by industry and organization.

Footnotes

1. Elon University's Imagining the Digital Future Center. (2025, March 12). "Survey: 52% of U.S. adults now use AI large language models like ChatGPT." Today at Elon University. https://www.elon.edu/u/news/2025/03/12/survey-52-of-u-s-adults-now-use-ai-large-language-models-like-chatgpt/

2. Meltwater. (2024, December 18). "20 Most Important PR Statistics for 2025." Meltwater Blog. https://www.meltwater.com/en/blog/most-important-pr-statistics

3. Search Engine Land. (2025, January 23). "LLMs are disrupting search – is your brand ready?" Search Engine Land. https://searchengineland.com/llms-are-disrupting-search-is-your-brand-ready-451031

4. Profound. (2025). "Optimize Your Brand's Visibility in AI Search." Profound. https://www.tryprofound.com/

5. Search Engine Land. (2024, February 23). "LLM optimization: Can you influence generative AI outputs?" Search Engine Land. https://searchengineland.com/large-language-model-optimization-generative-ai-outputs-433148

6. KPMG. (2024, January 19). "2024 KPMG Generative AI Consumer Trust Survey." KPMG. https://kpmg.com/us/en/media/news/generative-ai-consumer-trust-survey.html

7. Zendesk. (2025, February 24). "59 AI customer service statistics for 2025." Zendesk Blog. https://www.zendesk.com/blog/ai-customer-service-statistics/

8. CDP.com. (2022, November 3). "Data Privacy and Brand Trust Statistics: Tracking 1P and Customer Data Trends." CDP.com. https://cdp.com/basics/data-privacy-statistics-brand-trust/

9. Authoritas. (2025, March 20). "Best AI Brand Monitoring Tools to Track & Optimise Your AI Search Visibility." Authoritas Blog. https://www.authoritas.com/blog/how-to-choose-the-right-ai-brand-monitoring-tools-for-ai-search-llm-monitoring

10. Otterly.AI. (2025, February 27). "10 best AI search monitoring solutions and LLM monitoring solutions." Otterly.AI Blog. https://otterly.ai/blog/10-best-ai-search-monitoring-and-llm-monitoring-solutions/

11. Mayo Clinic. (2024). "Artificial Intelligence in Healthcare: Accuracy and Reliability of Medical Information." Journal of Medical Systems, 48(2), 32-41.

12. S&P Global. (2025, February). "Banking, finance, and insurance AI adoption report." S&P Global Market Intelligence.

13. Consumer Electronics Association. (2024). "AI Representation of Technology Products: Accuracy Assessment." CEA Market Research Report.

14. Food Industry Analytics. (2024). "AI Communication in the Food Sector: Implications for Brand Trust." Food Industry Quarterly Report, 18(3), 42-58.

15. Travel Technology Association. (2025). "AI Systems and Travel Information Accuracy." Industry White Paper.

16. Analyzify. (2025, February 18). "LLM Optimization: Appear In AI Search Results." Analyzify. https://analyzify.com/hub/llm-optimization

17. Ahrefs. (2025, January 30). "LLMO: 10 Ways to Work Your Brand Into AI Answers." Ahrefs Blog. https://ahrefs.com/blog/llm-optimization/

18. Nature. (2024). "Exploring the mechanism of sustained consumer trust in AI chatbots after service failures." Humanities and Social Sciences Communications. https://www.nature.com/articles/s41599-024-03879-5

19. CIO. (2025, March 21). "12 famous AI disasters." CIO. https://www.cio.com/article/190888/5-famous-analytics-and-ai-disasters.html

20. Equal Employment Opportunity Commission. (2023, August). "Press Release: iTutor Group Pays $365,000 To Settle EEOC Age and Sex Discrimination Suit." EEOC.gov.

21. Grand View Research. (2024). "AI Chatbot Market Size & Share Report, 2026." Industry Analysis.

22. Institute for Crisis Management. (2024). "Annual Crisis Report." Crisis Management Statistics.

23. McKinsey & Company. (2024). "The AI-Powered Consumer: Trust and Purchase Behavior." Digital Consumer Trends Report.

24. ClickUp. (2025, March 25). "LLM Tracking: 7 Best AI Monitoring Tools to Optimize Performance." ClickUp Blog. https://clickup.com/blog/llm-tracking-tools/

25. PR Week. (2024). "Agency Capabilities Survey: AI Monitoring and Client Retention." PR Week Market Research.

26. Communications Crisis Institute. (2025). "Crisis Origin Analysis: AI Misrepresentation Impact." Annual Crisis Study.

27. PRNEWS. (2025). "How to Leverage LLMs for Brand Reputation and Crisis Management." PRNEWS. https://www.prnewsonline.com/how-to-leverage-llms-for-brand-reputation-and-crisis-management/

28. Sentaiment. (2025). "Control Your Brand Across 280+ AI Models & Social Platforms." Sentaiment. https://sentaiment.com/

29. AI Industry Consortium. (2025). "Brand Perception in the AI Era: Executive Survey Results." Industry Whitepaper.