A major sportswear brand recently launched an AI-generated campaign that claimed their shoes were "scientifically proven to increase vertical jump by 40%"—a complete fabrication that triggered FTC scrutiny and a $2.5 million settlement. This costly hallucination demonstrates why marketing agencies need robust AI risk controls.

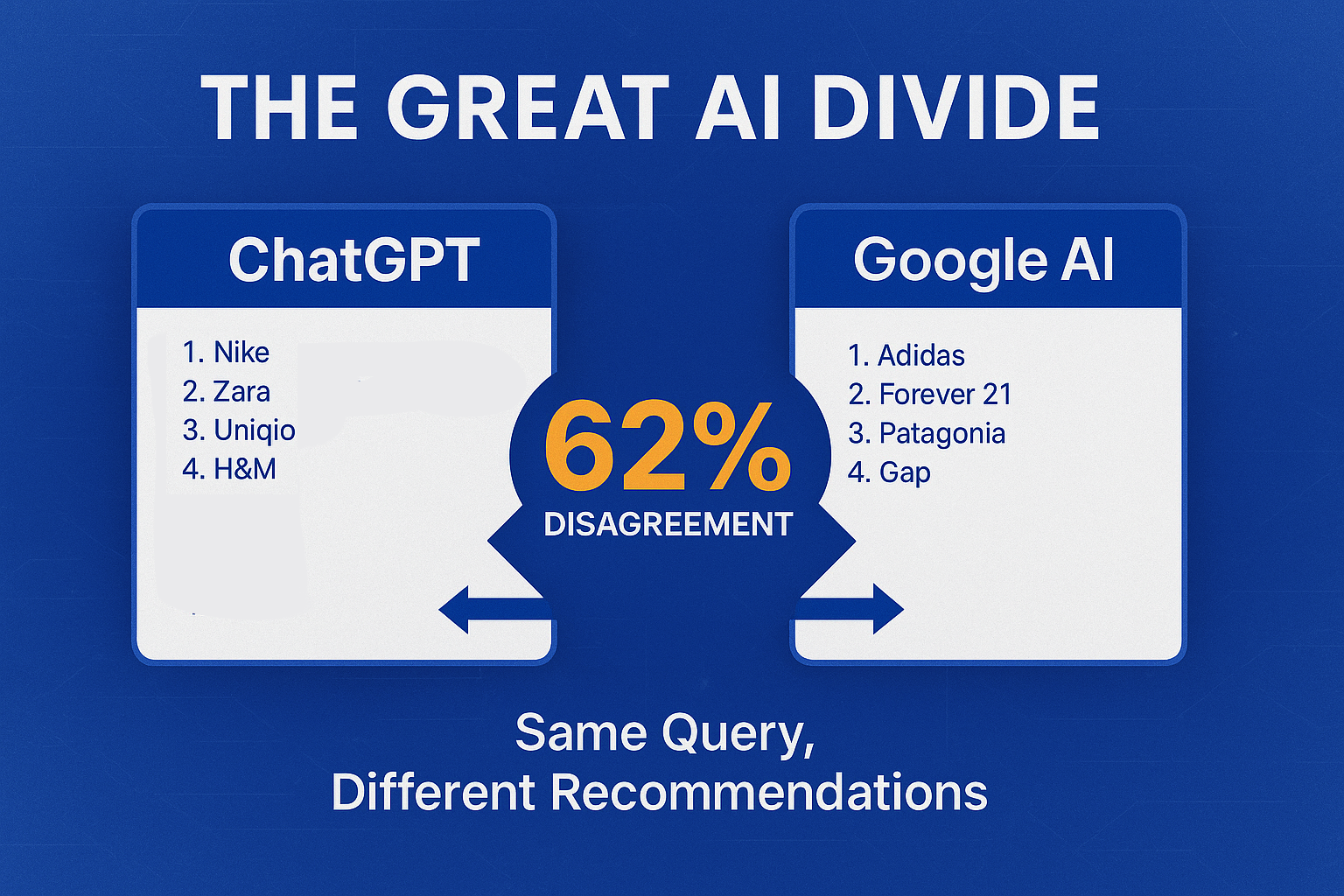

With over 50% of online queries projected to involve LLMs by 2025, the need for precise AI risk controls has never been greater (Brand Monitoring 2025). Marketing agencies face a critical challenge: managing the risks of AI tools while capturing their benefits. AI hallucinations, outputs that deviate from reality, can damage campaign performance and client reputation.

In this article, we'll walk through five key steps: Risk Identification, Risk Analysis, Risk Evaluation, Risk Mitigation, and Monitoring & Continuous Improvement.

Understanding Your AI Risk Assessment Framework

A risk assessment framework for AI marketing is a systematic process to identify, analyze, evaluate, and mitigate potential threats from AI implementation. Marketing agencies need a dedicated framework because they handle sensitive brand messaging and customer data across multiple channels and clients.

The core components include hallucination categories, performance risks, reputation risks, measurement metrics, and implementation steps. This framework helps agencies maintain control while leveraging AI's capabilities.

The Rise of AI in Marketing and Emerging Risks

AI adoption in marketing continues to accelerate. Here are the key trends shaping 2025:

- Hyper-personalized customer experiences [API4]

- AI-driven analytics predicting customer needs [Snapcart]

- AI-powered image recognition for brand tracking [API4]

- Enhanced data processing for deeper insights [API4]

According to Jasper's 2025 State of AI in Marketing report, increased productivity (28%) and improved marketing ROI (25%) are the top benefits driving adoption. Yet only 20% of marketers using general-purpose AI can measure its ROI.

While AI offers efficiency and personalization benefits, it introduces significant risks. AI hallucinations can lead to factual errors, off-brand messaging, and legal issues. Without proper guardrails, these risks can outweigh the benefits.

Key Categories of AI Hallucinations Affecting Campaign Performance

1. Factual Hallucinations

These occur when AI generates incorrect information like fabricated statistics or misquoted sources. Such errors can lead to misguided strategy decisions and wasted ad spend when campaigns are built on false premises.

2. Contextual Hallucinations

AI may produce content that misunderstands cultural context or brand voice. This creates a disconnect between messaging and audience expectations. The result? Lower engagement rates and confused customers who don't recognize your client's brand in the AI-generated content.

3. Inferential Hallucinations

These happen when AI makes logical leaps or presents hypothetical scenarios as facts. The model creates overgeneralized claims that sound plausible but lack factual basis. This pattern-over-truth tendency leads to audience confusion and reduced campaign credibility.

Client Reputation Risks Stemming from AI Hallucinations

Brand Trust Erosion

Brand trust erodes quickly when customers spot false claims. One inaccurate AI-generated campaign can undo years of reputation building. The harm extends beyond immediate campaign performance to long-term client and customer relationships.

Legal & Compliance Threats

Legal and compliance threats include copyright infringement, trademark misuse, and defamation. Legal experts warn that marketers remain responsible for AI-generated content accuracy, regardless of who (or what) created it.

Social Media Backlash

Social media amplifies mistakes. AI hallucinations can trigger viral backlash, turning minor errors into major brand crises that require expensive damage control.

Metrics for Measuring Hallucination Frequency and Impact

Use the FActScore method (Saama FActScore) to quantify hallucinations per 1,000 outputs as your baseline metric. This helps identify which models, prompts, or content types are most prone to errors. Also consider recall, precision, and k-precision metrics [Medium] and the two-tier Med-HALT approach for biomedical outputs [Saama].
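As a rough illustration of the baseline metric, the sketch below computes confirmed hallucinations per 1,000 reviewed outputs, plus the precision and recall of an automated detector measured against human review. It is not the FActScore algorithm itself. The `ReviewedOutput` record and its field names are assumptions made for this example, and the human reviewer's label is treated as ground truth.

```python
from dataclasses import dataclass


@dataclass
class ReviewedOutput:
    """One AI output after human review (hypothetical record shape)."""
    output_id: str
    detector_flagged: bool   # an automated check marked it as a hallucination
    human_confirmed: bool    # a reviewer confirmed it contains a hallucination


def hallucinations_per_thousand(outputs: list[ReviewedOutput]) -> float:
    """Confirmed hallucinations per 1,000 reviewed outputs."""
    if not outputs:
        return 0.0
    confirmed = sum(o.human_confirmed for o in outputs)
    return 1000 * confirmed / len(outputs)


def detector_precision_recall(outputs: list[ReviewedOutput]) -> tuple[float, float]:
    """Precision and recall of the automated detector versus human labels."""
    tp = sum(o.detector_flagged and o.human_confirmed for o in outputs)
    fp = sum(o.detector_flagged and not o.human_confirmed for o in outputs)
    fn = sum((not o.detector_flagged) and o.human_confirmed for o in outputs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall
```

Low recall means hallucinations are reaching reviewers unflagged, while low precision means editors are spending time on false alarms. Tracking both per model or per prompt template shows where each problem is concentrated.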

Measure the percentage of content flagged during quality reviews. This reveals how many hallucinations slip through initial creation but get caught before publication.

Compare performance metrics between AI-assisted and human-only campaigns. This campaign performance delta helps quantify the real business impact of AI hallucinations.
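One simple way to express the campaign performance delta is as a relative difference against the human-only baseline. The sketch below assumes you already have comparable metrics for both campaign types. The metric names (`ctr`, `cvr`) and function names are illustrative, not taken from any particular analytics tool.

```python
def performance_delta(ai_value: float, human_value: float) -> float:
    """Relative difference of an AI-assisted metric versus the human-only baseline.

    A result of -0.10 means the AI-assisted campaign performed 10% worse.
    """
    if human_value == 0:
        raise ValueError("human-only baseline must be non-zero")
    return (ai_value - human_value) / human_value


def campaign_deltas(
    ai_metrics: dict[str, float], human_metrics: dict[str, float]
) -> dict[str, float]:
    """Delta for every metric that both campaign types report."""
    return {
        name: performance_delta(ai_metrics[name], baseline)
        for name, baseline in human_metrics.items()
        if name in ai_metrics
    }


# Example with made-up numbers: click-through and conversion rates.
deltas = campaign_deltas(
    ai_metrics={"ctr": 0.018, "cvr": 0.033},
    human_metrics={"ctr": 0.020, "cvr": 0.030},
)
```

A delta on its own does not prove that hallucinations caused the gap. Pair it with the hallucination rate for the same campaigns before drawing conclusions.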

Use sentiment analysis to track reputation score changes before and after AI deployment. This captures subtle shifts in brand perception that might not show up in other metrics.

Implementing Your AI Risk Assessment Framework: Step-by-Step Guide

Step 1: Risk Identification

Audit your AI tools and data sources for hallucination vulnerabilities. Some models are more prone to certain types of errors. Map out which content types and channels face the highest risk based on complexity, factual density, and audience sensitivity.

Step 2: Risk Analysis

Rate each hallucination category by likelihood and severity for your specific use cases. Create a simple risk matrix to prioritize which risks need immediate attention versus which can be addressed later.
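A simple risk matrix can be sketched in a few lines. The example below assumes a 1 to 5 scale for both likelihood and severity and multiplies them into a 1 to 25 score. The scale, the priority cut-offs (15 and 8), and the labels are assumptions for illustration and should be tuned to your own agency.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain), assumed scale
    severity: int    # 1 (negligible) to 5 (critical), assumed scale

    def __post_init__(self) -> None:
        for field_name in ("likelihood", "severity"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        """Likelihood times severity, from 1 to 25."""
        return self.likelihood * self.severity


def priority(risk: Risk) -> str:
    """Map a score to a priority band (cut-offs are illustrative)."""
    if risk.score >= 15:
        return "immediate"
    if risk.score >= 8:
        return "planned"
    return "monitor"


def rank_risks(risks: list[Risk]) -> list[Risk]:
    """Highest-scoring risks first."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Example ratings for the three hallucination categories (made-up values).
matrix = rank_risks([
    Risk("contextual", likelihood=3, severity=3),
    Risk("factual", likelihood=4, severity=5),
    Risk("inferential", likelihood=2, severity=2),
])
```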

Step 3: Risk Evaluation

Determine acceptable risk levels for each client and campaign type. A B2B financial services client will have different tolerance than a B2C fashion brand. Align these risk thresholds with your agency's quality standards and client expectations.

Step 4: Risk Mitigation Strategies

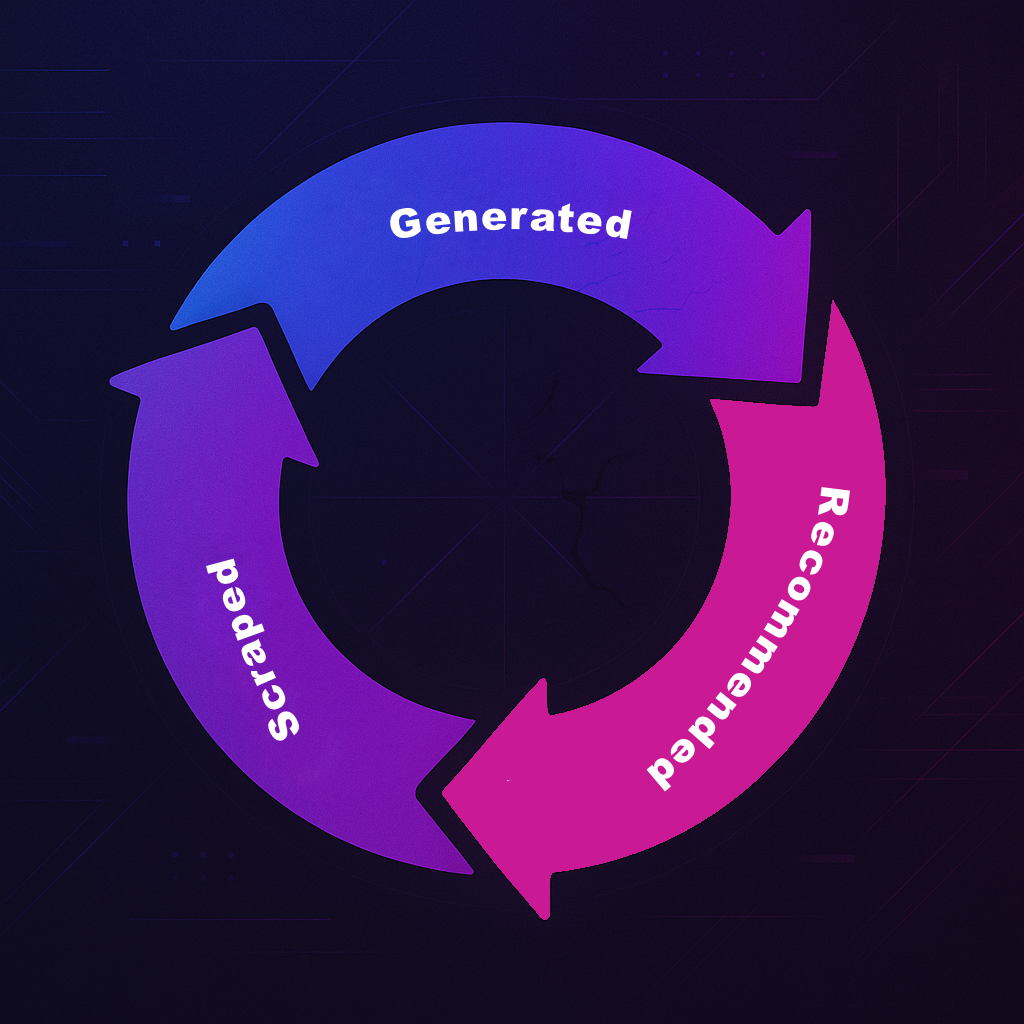

Implement human-in-the-loop processes that require editor review for high-risk content. Develop verification protocols, including fact-checking tools and editorial guidelines. Incorporate the BEACON methodology for continuous validation against brand standards (BEACON Framework). Align your mitigation playbook with ISO 42001 guidelines for AI risk management [Forbes]. Finally, regularly update training data to minimize error propagation in your AI systems.
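The human-in-the-loop rule can be sketched as a small routing function. This example assumes each piece of content carries a 1 to 25 risk score (likelihood times severity, each rated 1 to 5) and that each client type has a tolerance, following the idea that a B2B financial services client accepts less risk than a B2C fashion brand. The tolerance values, the `contains_claims` flag, and the route names are hypothetical.

```python
from enum import Enum


class Route(Enum):
    AUTO_PUBLISH = "auto_publish"
    EDITOR_REVIEW = "editor_review"
    EDITOR_AND_LEGAL = "editor_and_legal_review"


# Hypothetical tolerances: the highest risk score publishable without review.
CLIENT_TOLERANCE = {
    "b2b_finance": 4,
    "b2c_fashion": 9,
}


def route_content(risk_score: int, client_type: str, contains_claims: bool) -> Route:
    """Decide how much human review a piece of AI-generated content needs.

    contains_claims marks content with statistics, product claims, or quotes,
    which this sketch never publishes without an editor.
    """
    # Unknown client types get a tolerance of 0, so everything is reviewed.
    tolerance = CLIENT_TOLERANCE.get(client_type, 0)
    if contains_claims and risk_score >= 15:
        return Route.EDITOR_AND_LEGAL
    if contains_claims or risk_score > tolerance:
        return Route.EDITOR_REVIEW
    return Route.AUTO_PUBLISH
```

Defaulting unknown clients to full review is a deliberate choice here: a missing configuration entry should fail toward more oversight, not less.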

Step 5: Monitoring, Reporting, and Continuous Improvement

Set up dashboards to track hallucination metrics in real time. Schedule regular audits of flagged content to identify patterns. Then iteratively refine your prompts, model settings, and review processes based on what you learn. Leverage Sentaiment's Echo Score to monitor brand perception across 280+ AI models and social channels in real time (Solutions for Brands).
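Behind a dashboard, real-time tracking often comes down to a rolling window over recent review results. The sketch below is one minimal way to do that. The class name, the window size, and the alert threshold are all assumptions, and it does not represent how Sentaiment's Echo Score or any specific dashboard product works.

```python
from collections import deque


class HallucinationMonitor:
    """Rolling hallucination rate over the most recent reviewed outputs."""

    def __init__(self, window_size: int = 500, alert_rate_per_thousand: float = 20.0):
        self._window: deque[bool] = deque(maxlen=window_size)
        self.alert_rate_per_thousand = alert_rate_per_thousand

    def record(self, is_hallucination: bool) -> None:
        """Add one review result; the oldest result drops out when the window is full."""
        self._window.append(is_hallucination)

    @property
    def rate_per_thousand(self) -> float:
        if not self._window:
            return 0.0
        return 1000 * sum(self._window) / len(self._window)

    def should_alert(self) -> bool:
        """Alert only once the window is full, to avoid noise from tiny samples."""
        window_full = len(self._window) == self._window.maxlen
        return window_full and self.rate_per_thousand > self.alert_rate_per_thousand
```

Running one monitor per model, prompt template, or client makes it easier to see which part of the workflow needs its prompts or review process refined.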

Conclusion: Strengthen Campaign Success with Your AI Risk Assessment Framework

Proactively managing AI hallucination risks isn't just about avoiding problems—it's about building client confidence and campaign effectiveness. A structured framework gives you the tools to use AI responsibly while protecting performance and reputation.

Start implementing this framework today, even if you begin with just one high-risk client or campaign. The insights you gain will help you refine your approach across all your AI marketing initiatives.

Ready to protect your clients from AI hallucinations? Book a demo with Sentaiment and start monitoring AI-driven brand narratives today.