The inconvenient truth about Generative Engine Optimization that no one wants to say out loud.

The LLM brand monitoring space is experiencing a gold rush. Companies are raising millions in funding with bold promises: "Track your brand across all AI platforms!" "Optimize how LLMs see your brand!" "Influence ChatGPT responses!"

Here's what they're not telling you: Most of these claims are scientifically impossible.

The current wave of "Generative Engine Optimization" (GEO) companies are making promises they cannot keep and charging premium prices for what amounts to sophisticated guesswork.

It's time to separate the signal from the noise.

The Three Big Lies of GEO

Lie #1: "We Can Influence How LLMs See Your Brand"

The Reality: You cannot directly influence LLM responses about your brand.

Large Language Models like GPT-4, Claude, and Gemini are trained on massive datasets (trillions of tokens) that are essentially frozen at training time.

When you see companies claiming they can "optimize your brand for ChatGPT," they're fundamentally misrepresenting how these systems work. LLMs care about context and recognition, not just links, but the context they understand was determined during training, not through some magical optimization process you can control today.

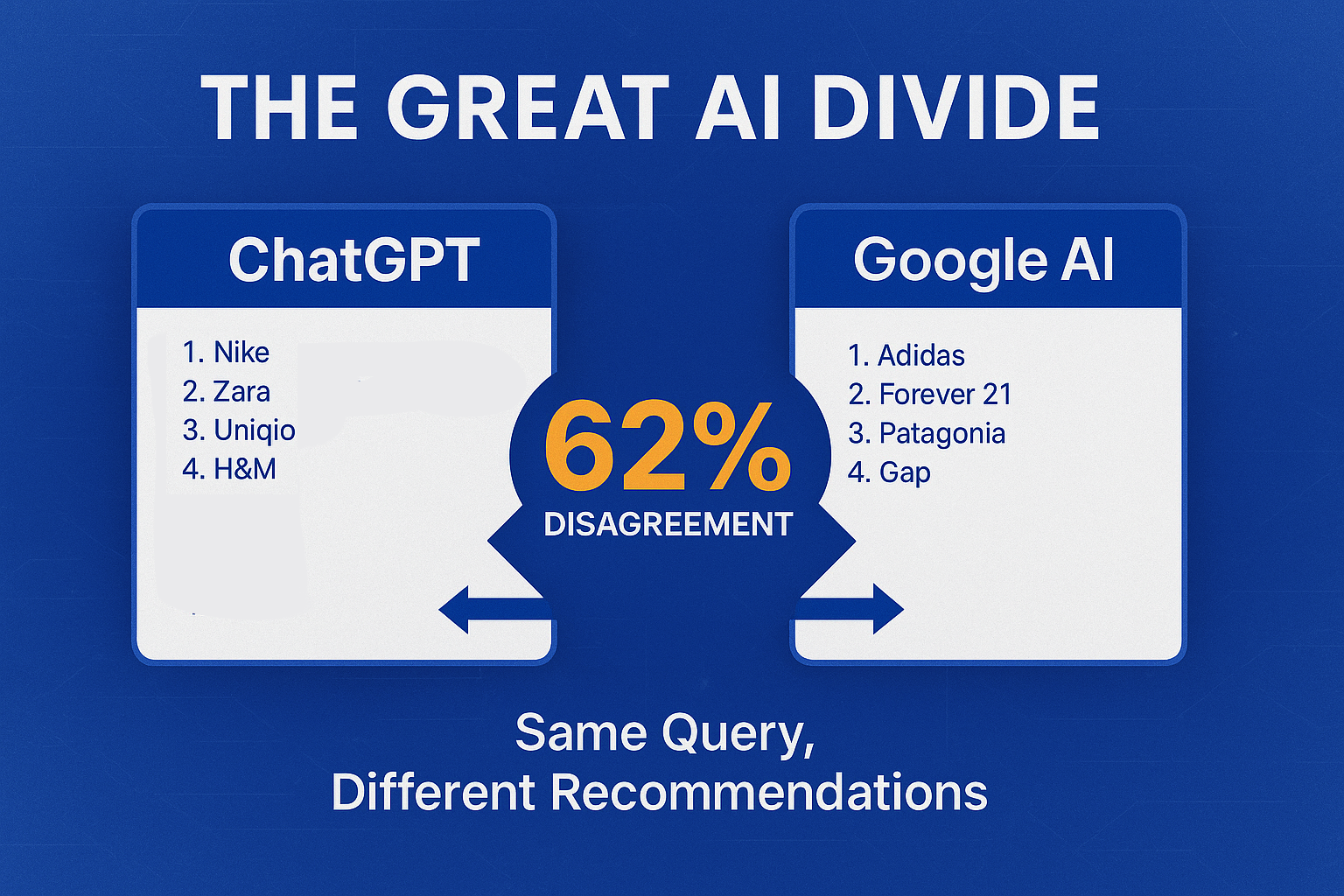

What's Actually Happening: These tools are measuring synthetic responses to predetermined prompts, not actual user interactions. As Andreessen Horowitz notes, these platforms "work by running synthetic queries at scale" and organize outputs into dashboards for marketing teams. Tools track a fixed set of prompts—maybe dozens or hundreds. Real users ask countless variations with nuance.

Lie #2: "Our Tracking Data Is Accurate and Representative"

The Reality: Current LLM monitoring relies on fundamentally flawed synthetic testing.

Here's the dirty secret of the industry: After testing multiple AI monitoring platforms, researchers found they "rely on synthetic data, not real user interactions."

The problems with synthetic testing are well-documented in academic research:

- Limited Coverage: Your tool might check "best B2B payment processor" but miss "cheapest international payment tool for startups" or "How do I automatically send invoices from Stripe to QuickBooks?" These long-tail, high-intent queries often convert better, but synthetic tools miss them entirely

- Inconsistent Results: We observed cases where tools reported brands "ranked #1" for queries, but manual testing showed completely different AI responses. Conversely, brands showed zero mentions in limited prompt sets while appearing frequently in other questions

- False Metrics: One client's "AI visibility score" spiked because a prompt accidentally triggered a known fact about their brand, creating false dominance that real users wouldn't see

Source: Omnius, "How AI & LLM Tracking and Monitoring Tools Really Work"

Lie #3: "We Provide Real-Time LLM Performance Monitoring"

The Reality: LLMs don't provide real usage data, and current tracking methods are estimates at best.

The fundamental problem is data contamination and model opacity. Recent statements about the impressive capabilities of large language models (LLMs) are usually supported by evaluating on open-access benchmarks. Considering the vast size and wide-ranging sources of LLMs' training data, it could "explicitly or implicitly include test data, leading to LLMs being more susceptible to data contamination."

This creates a measurement paradox: Your current measurement tools can't see the biggest growth opportunity in search, and LLMs present information with or without links. Both drive discovery... but there's often "zero attribution to the LLM mention."

Sources: Dong et al., "Generalization or Memorization: Data Contamination and Trustworthy Evaluation for Large Language Models"; Backlinko, "LLM Visibility: The SEO Metric No One Is Reporting On"

The Academic Evidence

The scientific community has been sounding alarms about these exact issues for months:

On Synthetic Testing Limitations: "Your evaluation is only as strong as the data you test on! If the dataset is too simple, too small, or unrealistic, the system might look great in testing but struggle in real use. A high accuracy score means nothing if the test doesn't challenge the system."

Source: Evidently AI, "How to create LLM test datasets with synthetic data"

On Data Contamination:Data contamination (testing and evaluating LLMs using test data which is known to the LLM) "may be a huge problem in NLP, leading to a lot of invalid scientific claims." The worst kind of data contamination happens when "a Large Language Model (LLM) is trained on the test split of a benchmark, and then evaluated in the same benchmark."

Sources: Ehud Reiter, "I'm very worried about data contamination"; Sainz et al., "NLP Evaluation in trouble"

On Measurement Accuracy: "LLM evaluation metrics are extremely difficult to make accurate, and you'll often see a trade-off between accuracy versus reliability."

Source: Confident AI, "LLM Evaluation Metrics: The Ultimate LLM Evaluation Guide"

Why Companies Don't Tell You This

The answer is simple: venture capital pressure and market positioning.

The funding activity shows demand: "Profound raised $20 million in Series A, AthenaHQ is backed by Y Combinator with ex-Google/DeepMind engineers, and Peec AI received seed funding from Antler."

Source: Nick Lafferty, "Ultimate Guide to LLM Tracking and Visibility Tools 2025"

When you've raised millions promising to solve LLM brand monitoring, admitting the fundamental limitations becomes... challenging. It's easier to showcase impressive dashboards, synthetic metrics, and aspirational claims than to explain the scientific constraints.

The Bottom Line: What's Actually Possible (And What's Not)

What GEO Tools CANNOT Do:

- Directly influence LLM responses about your brand

- Provide accurate real-time tracking of LLM mentions

- Guarantee that optimization efforts will change how AIs discuss your brand

- Offer precise metrics on actual user interactions with LLMs

What IS Actually Possible:

- Monitor how your brand appears in synthetic testing scenarios

- Understand patterns in LLM responses to specific prompts

- Track changes over time in a controlled testing environment

- Identify gaps in how your brand is represented

- Take actions that might improve your brand narrative over time

The difference is crucial: monitoring and understanding versus influencing and controlling.

A Better Path Forward

At Sentaiment, we've built our approach on 20+ years of marketing experience and a fundamental commitment to transparency. We don't promise to influence LLMs, we help you understand them.

Our Approach:

- Transparent Methodology: We clearly explain what our testing can and cannot tell you

- Scientific Rigor: Our analysis framework acknowledges the limitations of synthetic testing while maximizing its value

- Honest Metrics: We don't hide behind inflated metrics, we give you actionable insights to get ahead of LLM training within the bounds of what's possible

- Focus on Understanding: Rather than promising influence, we help you understand why LLMs perceive your brand the way they do

What We Actually Deliver:

- Comprehensive perception gap analysis across multiple LLM platforms

- Pattern identification in how your brand is discussed

- Competitive analysis against industry benchmarks

- Strategic LLM specific recommendations for improving your brand narrative overtime

The Call to Action

The LLM monitoring space needs more scientific rigor and less marketing hype and it will get there and evolve over the next 2 to 3 years. Companies deserve tools built on solid methodology, not overreaching promises.

As one industry analysis concluded: "Treat synthetic metrics as directional signals for macro trends, not precise KPIs. Use them for high-level monitoring, not granular decisions."

Questions to Ask Any Brand Monitoring or GEO Provider:

- Can you show me exactly how your synthetic testing works?

- What percentage of real user queries does your prompt set actually cover?

- How do you account for data contamination in your results?

- What are the specific limitations of your methodology?

- Can you guarantee that your optimization recommendations will change LLM responses?

If they can't answer these questions clearly and honestly, you're probably dealing with sophisticated marketing rather than scientific methodology.

Moving Beyond the Hype

The future of brand monitoring in the AI age isn't about controlling or influencing LLMs, it's about understanding them well enough to make informed strategic decisions about how to position and represent your brand.

That requires tools that are transparent about their limitations, and focused on delivering genuine insights rather than impressive-looking dashboards.

The companies promising to "hack" or "optimize" LLMs are selling a fundamental misrepresentation of how these systems work. The real opportunity lies in understanding what LLMs reveal about your brand perception and taking strategic action based on that understanding.

It's time to demand better from the industry. It's time for honest LLM brand analysis.

Want to see what transparent LLM brand analysis actually looks like? Contact our team to learn how Sentaiment's methodology differs from the synthetic testing crowd.

Key Sources

Academic Research:

- Dong, Y., et al. (2024). "Generalization or Memorization: Data Contamination and Trustworthy Evaluation for Large Language Models." arXiv preprint arXiv:2402.15938.

- Sainz, O., et al. (2023). "NLP Evaluation in trouble: On the Need to Measure LLM Data Contamination for each Benchmark." Findings of EMNLP 2023.

Industry Analysis:

- Omnius. (2024). "How AI & LLM Tracking and Monitoring Tools Really Work?"

- McKenzie, L. (2025). "LLM Visibility: The SEO Metric No One Is Reporting On (Yet)." Backlinko.

- Lafferty, N. (2024). "Ultimate Guide to LLM Tracking and Visibility Tools 2025."

Technical Guides:

- Evidently AI. (2024). "How to create LLM test datasets with synthetic data."

- Confident AI. (2024). "LLM Evaluation Metrics: The Ultimate LLM Evaluation Guide."

- Reiter, E. (2024). "I'm very worried about data contamination."