A recent BCG survey found that 74% of companies struggle to achieve value from AI adoption, while PwC's research identifies a "trust gap" holding back executives. With 50% of online queries expected to be AI-driven by 2025, this trust deficit directly affects adoption rates. Sentaiment serves 15,000 monthly active users with real-time tracking across 20+ LLMs, helping brands correct how they are perceived before issues arise.

This is where LLM Brand Perception Monitoring comes in: a critical practice for any organization looking to implement AI solutions successfully.

Understanding LLM Brand Perception Monitoring

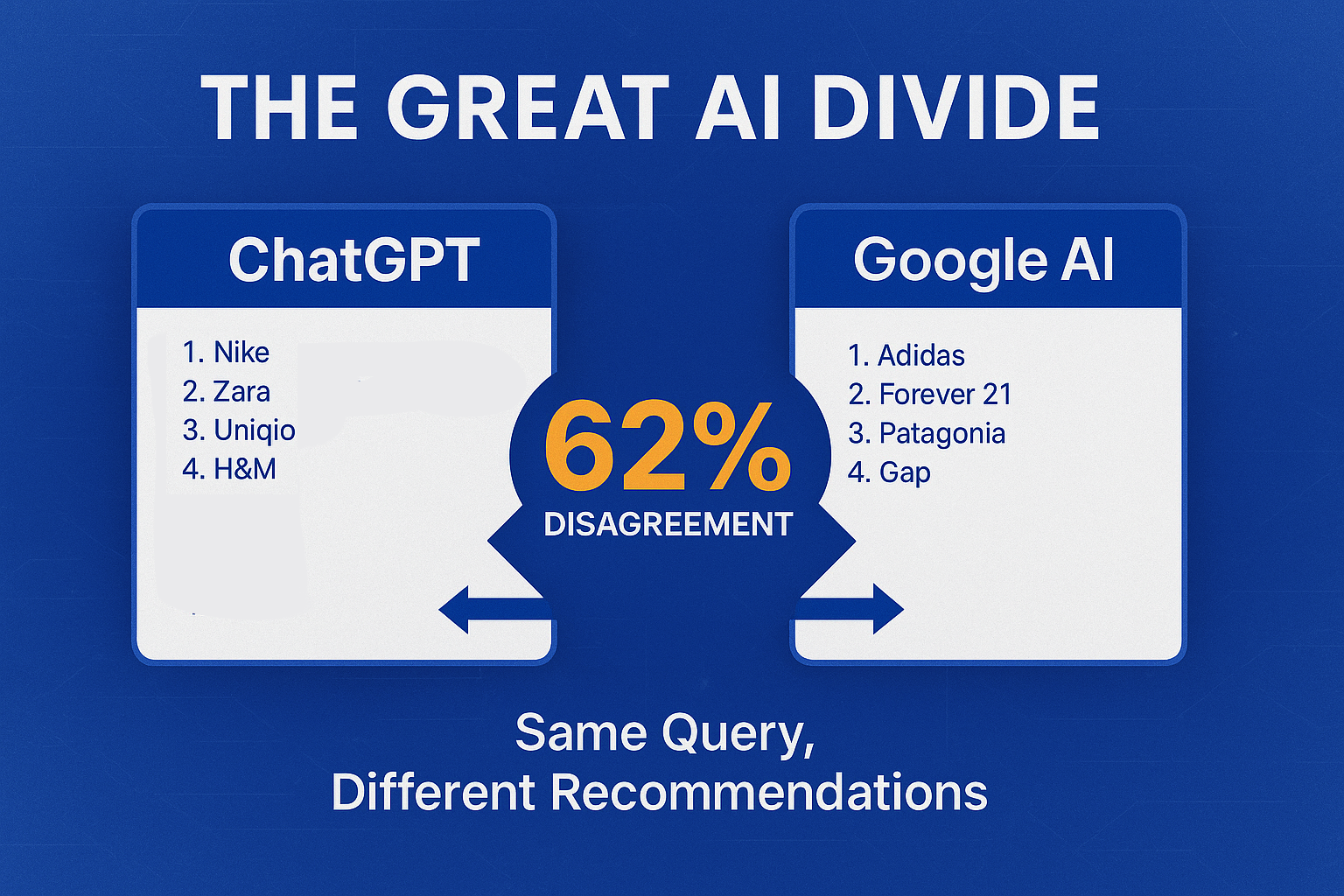

LLM Brand Perception Monitoring goes beyond traditional brand tracking. It focuses specifically on how AI language models like GPT, Bard (now Gemini), and Claude are perceived by users, stakeholders, and the public.

Platforms like LLM Marketing Monitor, Marlon, and the DEJAN methodology show how brands benchmark entity visibility and sentiment across LLMs. On Sentaiment's dashboard (sentaiment.com), you can compare sentiment trends for GPT, Bard, Claude, and 17 more models side by side.

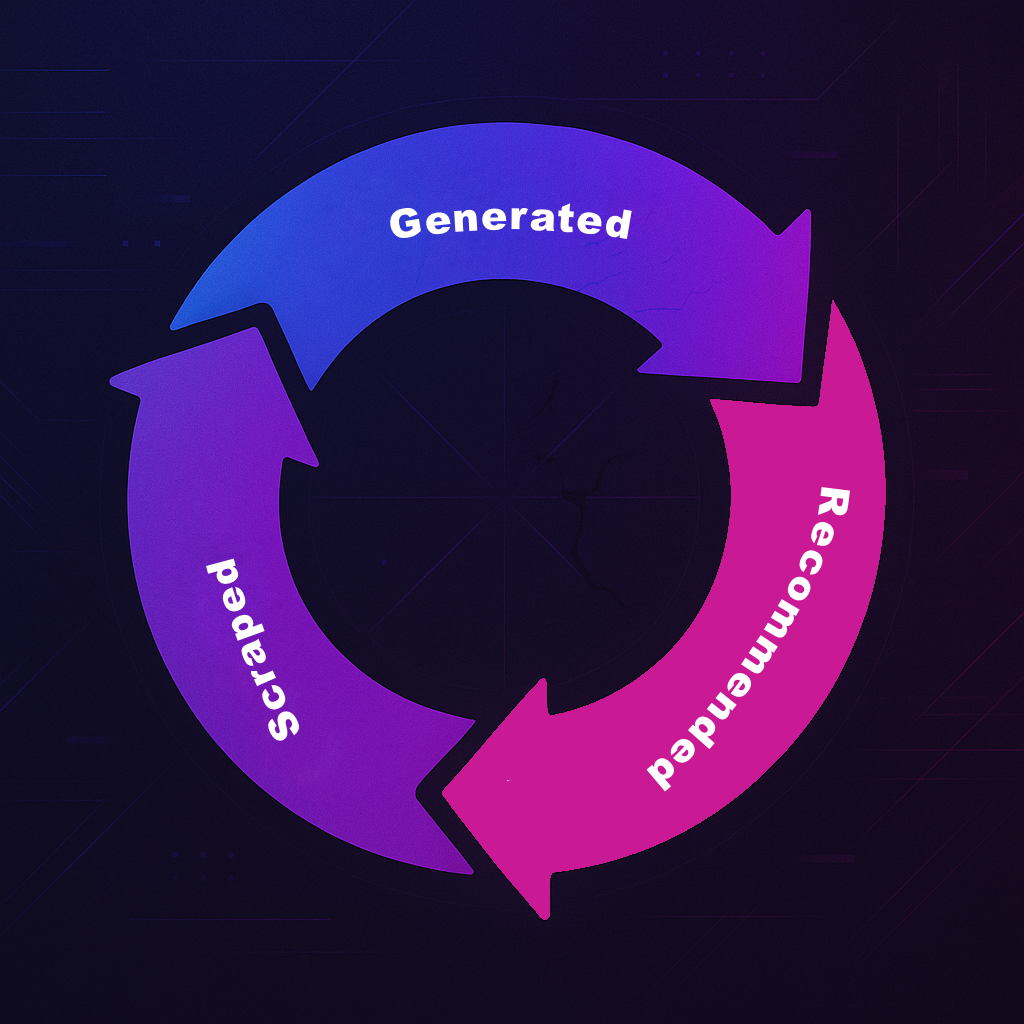

This practice involves systematically tracking user sentiment, media coverage, and stakeholder feedback about your AI models. Unlike general AI branding, LLM perception monitoring focuses on the unique challenges of language models: accuracy, bias, safety, and transparency.
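To make "systematically tracking sentiment" more concrete, here is a minimal Python sketch of the side-by-side comparison step. It assumes you already have per-model sentiment scores on a -1 to 1 scale (for example, from running a sentiment classifier over each model's answers to a fixed set of brand prompts). The record format, model labels, and the `sentiment_by_model` function are hypothetical illustrations, not Sentaiment's API.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical record: (model_name, date, sentiment), with sentiment in [-1.0, 1.0].
Record = tuple[str, str, float]


def sentiment_by_model(records: list[Record]) -> dict[str, float]:
    """Return the average sentiment per model for a side-by-side comparison."""
    scores: dict[str, list[float]] = defaultdict(list)
    for model, _date, sentiment in records:
        scores[model].append(sentiment)
    # Round for display; keep the raw values if you need further analysis.
    return {model: round(mean(values), 3) for model, values in scores.items()}


if __name__ == "__main__":
    # Illustrative sample data, not real measurements.
    records = [
        ("gpt", "2024-06-01", 0.6),
        ("gpt", "2024-06-02", 0.4),
        ("claude", "2024-06-01", 0.2),
        ("claude", "2024-06-02", 0.0),
    ]
    print(sentiment_by_model(records))  # {'gpt': 0.5, 'claude': 0.1}
```

A single average per model hides day-to-day swings, so in practice you would likely group by date as well and watch the trend over time.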

Early insights from monitoring help shape development priorities and communication strategies. They tell you what's working, what's not, and where to focus improvements.

The Role of Public Trust in Driving LLM Adoption

Trust directly correlates with how quickly and widely an LLM gets adopted. According to KPMG's global study, while 66% of people use AI regularly, only 46% are willing to trust AI systems.

Key trust drivers include:

- Data privacy assurances

- Accuracy benchmarks

- Ethical guardrails

- Transparency in operations

A comprehensive study identifies eight trust dimensions that brands must address: truthfulness, safety, fairness, robustness, privacy, machine ethics, transparency, and accountability. For instance, one major vendor postponed its LLM launch after detecting gender bias in early tests, and later applied differential privacy safeguards to regain confidence (Neptune.ai).

When users trust your LLM, they're more likely to integrate it into their workflows. But when trust breaks, adoption stalls. This makes monitoring perception not just helpful but necessary.

Reputation Management through Strategic Communication

How you communicate about your LLM shapes public perception. Effective communication strategies include:

- Transparent updates: Regular release notes, safety audits, and open evaluations

- Proactive crisis communication: Prepared statements for potential issues, quick acknowledgment when problems arise

- Multi-channel engagement: Technical blogs, webinars, developer forums, and social media Q&A

Being transparent about limitations builds credibility. And when issues inevitably arise, quick, honest responses prevent minor concerns from becoming reputation crises.

With a 4.9/5 rating, Sentaiment's real-time dashboards across 20+ LLMs power proactive reputation management, a priority given that 96% of businesses agree shaping AI brand perception is critical.

Key Metrics and Tools for Monitoring LLM Brand Perception

Effective LLM Brand Perception Monitoring combines quantitative and qualitative approaches:

Quantitative Measures:

- Net Promoter Score (NPS) – gauging customer loyalty and satisfaction

- Sentiment analysis scores across platforms

- Share of Voice (SOV) – measuring visibility compared to competitors

Qualitative Methods:

- User interviews and feedback sessions

- Developer community engagement

- Expert reviews and evaluations

Also track Brand Awareness, Volume of Mentions, Brand Loyalty, and Brand Salience for a holistic view of your LLM's perception.
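Two of the quantitative measures above have standard formulas. NPS is the percentage of promoters (ratings of 9 or 10 on a 0 to 10 scale) minus the percentage of detractors (ratings of 0 to 6). Share of Voice is your brand's mentions as a percentage of all mentions across the brands you track. The Python sketch below assumes you have already collected survey ratings and mention counts (for example, how often each brand appears in LLM answers to category prompts); the brand names and numbers in the usage lines are hypothetical placeholders.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """NPS: % promoters (9-10) minus % detractors (0-6); ranges from -100 to 100."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be on the 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


def share_of_voice(mentions: dict[str, int], brand: str) -> float:
    """SOV: the brand's mentions as a percentage of all tracked brands' mentions."""
    total = sum(mentions.values())
    if total == 0:
        return 0.0  # no mentions recorded for any brand yet
    return 100.0 * mentions.get(brand, 0) / total


if __name__ == "__main__":
    # Illustrative inputs: ten survey ratings and hypothetical mention counts.
    print(net_promoter_score([10, 9, 8, 7, 6, 3, 10, 9, 9, 5]))  # 20.0
    print(share_of_voice({"our_brand": 30, "competitor_a": 50, "competitor_b": 20}, "our_brand"))  # 30.0
```

Passives (7 or 8) count toward the total but toward neither group, which is why the sample yields 20.0: five promoters minus three detractors, out of ten ratings.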

Tools like Sentaiment provide comprehensive dashboards that track, in real time, how your brand is represented across multiple AI language models.

Best Practices for Effective LLM Brand Perception Monitoring

Best practices for monitoring your LLM brand perception include:

- Establish a regular monitoring schedule: daily sentiment checks, weekly stakeholder reports

- Integrate perception insights into product roadmaps

- Train cross-functional teams to interpret metrics and coordinate responses

- Test LLM applications against potential vulnerabilities

- Track essential metrics with robust alerting systems

- Differentiate monitoring from observability by pairing predefined metrics with root-cause insights (Coralogix guide)
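One simple way to pair a regular monitoring schedule with alerting is a threshold rule on daily sentiment. The Python sketch below flags any model whose recent average sentiment has dropped more than a chosen margin below its earlier baseline. The window length, the 0.2 drop threshold, and the `sentiment_alerts` function are assumptions to tune for your own data; this is a basic illustration, not the method described by Coralogix or WhyLabs.

```python
from statistics import mean


def sentiment_alerts(
    daily_scores: dict[str, list[float]],
    window: int = 7,
    max_drop: float = 0.2,
) -> list[str]:
    """Flag models whose recent average sentiment fell sharply below baseline.

    The last `window` days are the recent period; all earlier days form the
    baseline. A model is flagged when baseline minus recent exceeds `max_drop`.
    """
    if window < 1:
        raise ValueError("window must be at least 1 day")
    alerts = []
    for model, scores in daily_scores.items():
        if len(scores) < 2 * window:
            continue  # need at least `window` baseline days plus `window` recent days
        baseline = mean(scores[:-window])
        recent = mean(scores[-window:])
        if baseline - recent > max_drop:
            alerts.append(f"{model}: sentiment fell from {baseline:.2f} to {recent:.2f}")
    return alerts


if __name__ == "__main__":
    # Illustrative daily scores in [-1.0, 1.0], using a 3-day window.
    daily = {
        "gpt": [0.5, 0.5, 0.5, 0.1, 0.1, 0.1],
        "claude": [0.3, 0.3, 0.3, 0.3, 0.3, 0.3],
    }
    for alert in sentiment_alerts(daily, window=3):
        print(alert)  # gpt: sentiment fell from 0.50 to 0.10
```

Comparing window averages rather than single days keeps one noisy answer from triggering an alert; the alert itself only tells you that something changed, so root-cause analysis still has to follow.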

For more on monitoring frameworks and alerting, see WhyLabs' five best practices for monitoring large language models.

And remember: monitoring should scale alongside your models. As your LLM grows in capability and reach, your monitoring systems need to keep pace.

Conclusion: Strengthening AI Adoption through Proactive Brand Perception Monitoring

With the EU AI Act now in force and global AI spending projected to hit $749 billion by 2028, LLM Brand Perception Monitoring has become essential for AI success. Organizations that prioritize trust through systematic monitoring will lead the next wave of adoption.

Ready to take control of your brand's AI perception? Start with Sentaiment today. See how your brand appears across 20+ language models in real time and shape your AI narrative before others define it for you.